.:::--=:--:..

::..:--::::-:-=-:

.: :----:::. .:::..::-:

.:-. .-:.. . .: .:-

..:- .:-- . = .- .-

.-: .:--. :. -. :. ::

-- .:-: .::. .-. .: :.

:-: .:-. .:. : .::..:. -

-: .:=.::-: .:-:--:: :::

.-. .:--:.:: .. ===....

.--:-::.. :: ::

:-::--.:: .::

.::....-- :..

:: .::::::. --::.

::::-::.- .-:.::: .-:

:: :: ::.:.- .::

:. .: -. - .:

: .. : .::- .

:... ::

greetings and salutations im dylan im a 20 y/o creative technologist (cringe) based in sf.

im building lil apps on the internet rn but prev i was an ml eng at an image model startup (lexica.art) and prev prev i built a lot of goofy apps and co-founded a gen ai workflow company (bruh)

i like fashion, webgpu, digicore, black hole thermodynamics, subculture history, pytorch, cortados, pinterest, @typedfemale, @kalomaze, fleetwood.dev, leroy, slow pulp, cybersigil tattoos,...

_ .-') _ ('-. .-') _ .-') _

( ( OO) ) ( OO ).-. ( OO ) ) ( OO ) )

\ .'_ ,--. ,--,--. / . --. ,--./ ,--,',--./ ,--,'

,`'--..._) \ `.' /| |.-') | \-. \| \ | |\| \ | |\

| | \ '.-') / | | OO .-'-' | | \| | | \| | )

| | ' (OO \ / | |`-' |\| |_.' | . |/| . |/

| | / :| / /\_(| '---.' | .-. | |\ | | |\ |

| '--' /`-./ /.__)| | | | | | | \ | | | \ |

`-------' `--' `------' `--' `--`--' `--' `--' `--'

work

lexica

ml engineer (apr '24 - june '25)

this was my first real full-time job.

withdrew my college admission to join and help train a video model.

mainly did a LOT of dataset eng. built internal labeling tools, wrote distributed jobs across our H100 cluster, ran massive data collection jobs, llm filtering jobs, clip jobs, elo tournaments, etc.

learned vanilla torch >> hf transformers for hacking around with archs bc transformers is leaky asf, distributed computing, k8s, gpu power util optimization, vllm, numpy vectorization > iterrows for iterating over a df, parquet and tar stuff (so many parquet and tar files), etc.

more importantly though i picked up a lot of intuitions on how models are actually trained in real life - just a data (quality + diversity) and compute multiplier problem tbh.

learned sm from sharif (founder) about just engineering and work in general, and im so grateful to him for giving me a shot when i was 19 and had no previous ml experience.

projects

comfy workflows

(oct '23 - apr '24)

tl;dr: easily share, find, and reproduce comfyui workflows

so this is a company i co-founded with subhash, it's basically a site that lets you easily reproduce other users' gen ai workflows on your local machine.

we noticed people were using this niche oss called ComfyUI (not so niche now but back when we realized it existed they were at like 8k github stars iirc) to do stuff that you weren’t able to do in one-shot with a single model (ex: taking an image of a mountain and a river and trying to **only** animate the river).

but it was SO hard to make these workflows from scratch on your own most of the time, and the way people were helping each other on places like reddit was hacky, so we made the site to facilitate this basically.

we raised $75k from finc and got to tens of thousands of MAUs, a couple thousand dollars in MRR, and thousands of people in our discord server.

we were doubling every month and had a lot of fun since a lot of people were actually uploading to the site and getting value out of it.

in fact, we haven't touched it in over a year and people still join our discord server and upload workflows nearly every day (!!)

lorai

(jul '23 - oct '23)

tl;dr: train stable diffusion LoRAs in your browser

so i took the site down bc i wanted to focus on comfy workflows but basically i found out about a very efficient way (at the time) of fine-tuning diffusion models called Low-Rank Adaptation and saw how using these lightweight loras rather than a base model was so much better for generating on-style or "on-brand" images, and it was way faster than doing a full-finetune.
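for a rough sense of why loras are so lightweight: instead of updating a full weight matrix W, LoRA trains two small matrices B and A and adds their product on top of the frozen W. this is a minimal pure-python sketch of that idea, not lorai's actual training code; the function names, the layer size, and the rank are all made up for illustration.

```python
def lora_param_counts(d_in, d_out, rank):
    """trainable params for a full fine-tune vs. a LoRA adapter on one layer."""
    full = d_in * d_out            # every entry of W is trainable
    lora = rank * (d_in + d_out)   # only A (rank x d_in) and B (d_out x rank)
    return full, lora


def matvec(matrix, vector):
    """plain matrix-vector product on nested lists."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, rank):
    """y = W x + (alpha / rank) * B (A x), with W frozen and A, B trainable."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


# hypothetical 768x768 layer with a rank-8 adapter
full, lora = lora_param_counts(768, 768, 8)
print(full, lora, full // lora)
```

with those (assumed) numbers the adapter trains 48x fewer parameters for that layer, which is roughly why training is faster and the output file is small.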

but the only way to train these at the time was to run py scripts or use some jank oss that really only technical people would go through the trouble of using or use an unintuitive site.

so over a weekend i built a jank v1 that was literally a form where people would upload their training data and click a button that would submit it to a db and then i would run a script to train the LoRA and EMAIL them the safetensors file 💀

but this went viral on r/StableDiffusion (twice! thrice!!) and i had this hard paywall of $5 on the v1 just to see if people would pay for it and several people did (first internet money).

so i built it out into a real site where you could train your own LoRAs very quickly and download the safetensors file or even use it to generate images within the site (no external GUI needed) and i added a pro plan, etc. and at peak i was making a little over $300/mo, incorporated, and was in talks to get a small check of $25k (but before that was finalized i started to work on comfy workflows).

was super fun and my first project that felt like it had actual motion.

flo

(jul '23)

tl;dr: a node cli tool that monitors your processes for errors and proposes solutions

you can run a process in your terminal with flo as a prefix like `flo monitor "npm run dev"` and if an error appears in your process, the cli tool catches it and tells you how to solve the error after a couple of mins, given the context of your entire codebase.

this was in the era where gpt-4 was still in a limited beta so i used langchain function agents w gpt 3.5 to read your codebase and provide the solution lol.

was cool bc the agent would read the stack trace and call a tool to grep the file where the error was, etc.
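the core loop can be sketched like this: run the wrapped command, grab stderr, pull file locations out of the stack trace, and bundle it all into a prompt for the model. this is a python sketch under assumptions, not flo's real code (flo was a node cli using langchain agents); the regex, the function names, and the prompt wording are hypothetical, and it waits for the command to exit instead of watching a long-running dev server.

```python
import re
import subprocess
import sys

# assumed stack trace format: paths like /app/src/index.js:10 (node-style)
TRACE_LOCATION = re.compile(r"([\w./\\-]+\.(?:js|jsx|ts|tsx)):(\d+)")


def run_and_capture(cmd):
    """run the wrapped command and return (exit_code, stderr_text)."""
    result = subprocess.run(cmd, capture_output=True, text=True)
    return result.returncode, result.stderr


def extract_trace_locations(stderr_text):
    """pull (file, line) pairs out of a stack trace, in order, no duplicates."""
    seen = []
    for path, line in TRACE_LOCATION.findall(stderr_text):
        location = (path, int(line))
        if location not in seen:
            seen.append(location)
    return seen


def build_prompt(stderr_text, locations):
    """assemble the text that would be handed to the model (hypothetical wording)."""
    files = "\n".join(f"- {path} (line {line})" for path, line in locations)
    return (
        "a process failed with this error:\n"
        f"{stderr_text}\n"
        "files mentioned in the stack trace:\n"
        f"{files}\n"
        "suggest a fix."
    )


if __name__ == "__main__":
    # usage: python monitor.py npm run dev
    code, err = run_and_capture(sys.argv[1:])
    if code != 0:
        print(build_prompt(err, extract_trace_locations(err)))
```

in the real tool the "read the file where the error was" step was a tool call made by the agent; here it's reduced to listing the locations so the sketch stays self-contained.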

free and open source, users would provide their own openai api key.

it went semi-viral on twitter and it was fun to work on so i built a vs code extension (didn't do too bad on x either) for a more polished version a few days later.

flywheel

(feb '23 - apr '23)

tl;dr: typeform for leaderboards

this project started as a goofy site where people would fill out a form with their # of users last week vs. now to join a leaderboard for "founders" lmao.

this site was simply the waitlist for the actual site i was building which was meant to be a place where you could compete with your other founder friends (who has the most MRR, users, etc.), it was gonna be a leaderboard that's connected to your stripe and computes your Δ every week to rank you with your friends (if you were top 1 you had a "unicorn" tag if you were top 5 you were "series D", etc.)
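the ranking logic described above is simple enough to sketch. this assumes Δ means the absolute change in a metric (like MRR) between last week and this week, which the original doesn't specify; the function name and data shapes are made up, and tiers below "series D" are left untagged since they aren't spelled out.

```python
def rank_by_weekly_delta(last_week, this_week):
    """rank founders by how much their metric grew since last week."""
    rows = []
    for name, now in this_week.items():
        # anyone missing from last week is treated as starting from 0
        delta = now - last_week.get(name, 0)
        rows.append((name, delta))

    # biggest weekly change first
    rows.sort(key=lambda row: row[1], reverse=True)

    board = []
    for position, (name, delta) in enumerate(rows, start=1):
        if position == 1:
            tag = "unicorn"
        elif position <= 5:
            tag = "series D"
        else:
            tag = None  # lower tiers aren't defined in the description
        board.append({"rank": position, "name": name, "delta": delta, "tag": tag})
    return board


# hypothetical MRR numbers pulled from each founder's stripe
last_week = {"ana": 100, "bo": 50, "cy": 10}
this_week = {"ana": 110, "bo": 90, "cy": 15}
for row in rank_by_weekly_delta(last_week, this_week):
    print(row)
```

ranking on the delta instead of the raw number means a small app that grew a lot can beat a big app that stayed flat, which was kind of the point of the bit.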

however, users started asking me if i could build leaderboards for their own apps, so the site transformed into something like typeform for leaderboards, where users could easily create and manage multiple leaderboards, and these leaderboards could have custom logos, themes, etc. and would be hosted on dynamic routes, so they could share the link and *their* users could join their leaderboards by filling out a form.

eventually people wanted to embed the leaderboards within their own apps and wanted complex logic where the leaderboard would update based on their own app's data, so i built an npm package LOL.

eda

(jun '23)

tl;dr: natural language image editing turned video editing tool for founders (cringe)

ok so at the time i was really into screen studio which lets you screen record these like really beautiful videos with padding and gradients and borders and auto-zooming based on mouse clicks. my flow was screen studio video -> imovie + music -> post on twitter, which got me thinking about video editing, and that got me thinking about image editing, and i was like we should just be able to edit images with natural language.

so i built this scrappy v1 where you upload an image, describe the edit, i prompt upscale and then send that over to a model on replicate and return the edited image, almost like a proto-4o. i posted it on x and it did pretty well.

then i added simple video gen to the site using replicate again, and then i used this sdk to create a full blown video editing ui, the idea being that i would add transitions, have templates, private "draft" links, etc. so that founders could "streamline" their process for creating video demos.

then i shipped this version that would let you upload a video and add music to it, and this grew into the last version which was like a web-based version of screen studio.

so originally i wanted a tool to edit images w natural language but the base models just weren't good enough yet, and so i moved into trying to solve a problem i actually had, sharing private draft links for video demos, quickly adding music, making cool transitions easily, etc. but eventually i just got bored with this problem.

simulacra labs

(may '23)

tl;dr: simulate generative agents in a web game

omg im remembering how fun this was, basically this was a time when i was really obsessed with langchain - i was just playing around a lot with their js and py sdks and i came across these generative agent classes, and i had just read this paper on generative agents so in a day i built this streamlit app (backend doesn't work anymore so just linking twt) that would let you spin up a simulation between multiple agents, where each "agent" was a generative agent abstraction on top of a base instruct model.

then i realized that the generative agent classes only existed in the py sdk??

turns out langchain at the time (i’m not sure of their state now) was bifurcated between the js and py abstractions, where the py lib was always ahead of the js one and they were never at parity because the py sdk was developed first, and so i TRANSLATED the generative agent abstractions from py to ts because i wanted to build a js web game with these agents and there was no way to do so since they didn’t exist in the js/ts sdk LMAO

a langchain team member even gave me a s/o on x for this lol.

then i used my translated abstractions in the js sdk to start working on a web game where the game engine was phaser and i would connect the characters to the generative agents and have them interact with each other and the environment.

i got both parts to work independently in the web "game", i got the generative agents to work, and a very rough phaser game to work (with tilemaps and everything, etc.) but connecting these two proved to be a lot more challenging than i thought...

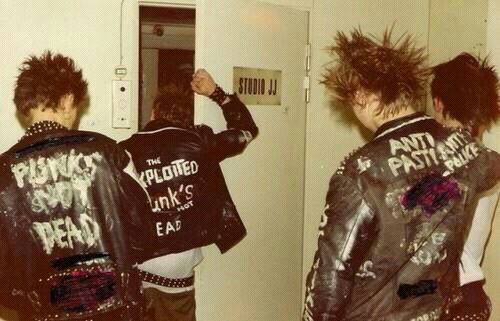

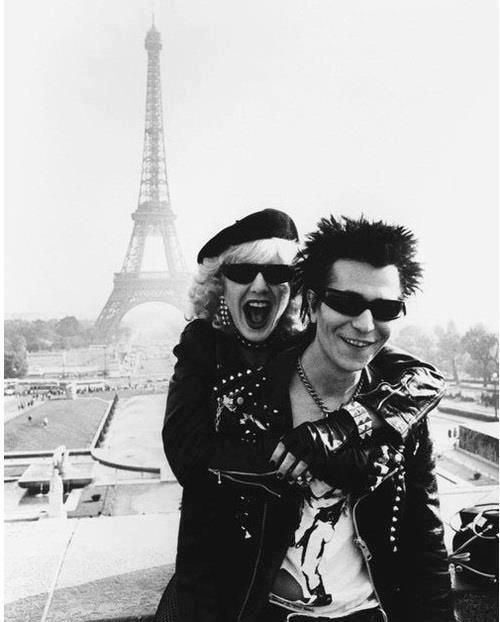

subcultures / aesthetics / cores i like

hyperpop


the first "alt" genre i really got into. born in early 2010s uk avant-pop scene, the genre remained mostly underground until 2019 when it blew up on tiktok, peaked in 2021 as a wave of teens just started making hyperpop from their bedrooms during the pandemic and spreading it through tiktok/soundcloud - as a teen at the time i really messed with it. phased out of the mainstream by '22 and broke off into digicore/dariacore among other genres. was really formative for me during high school. very loose genre so aesthetic is all over the place.
